Hi, I’m Steven.

I create software solutions that use emerging technologies to strengthen how people work and collaborate.

About Me

With a unique blend of experience in R&D, sales, design, and software development, I work closely with customers to explore how technology can transform their businesses and create the software that brings those visions to life.

My background combines technical expertise in software development with business development skills, which lets me bridge the gap between innovation and practical application.

With over eight years in the technology and innovation space, I have:

Delivered 10+ custom software solutions to clients.

Presented and demoed at 20+ industry and academic conferences.

Contributed to academic research that has received 90+ citations.

Currently

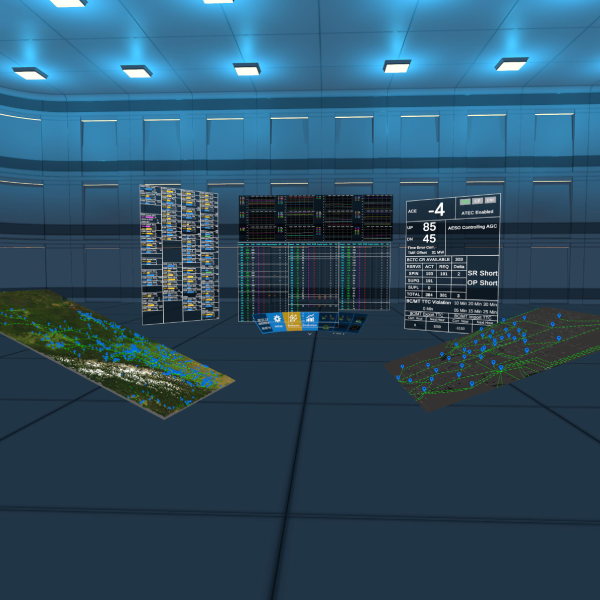

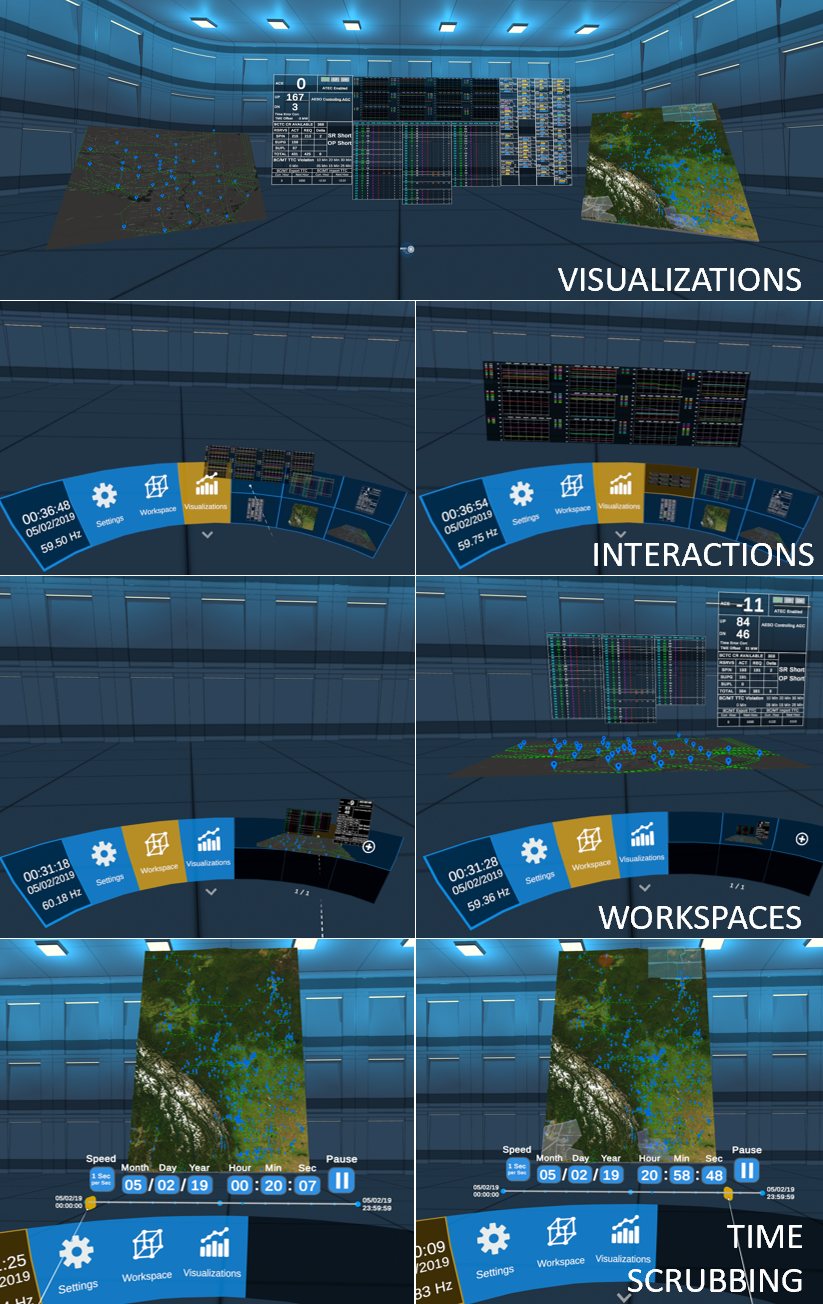

VizworX delivers software solutions that help enterprises solve business challenges, using advanced technologies to simplify complex information and enhance decision-making.

At VizworX, I serve as Lead Solutions Engineer, overseeing the full lifecycle of XR, web, and cross-platform projects. I guide cross-functional teams from requirements through deployment, acting as the primary technical liaison to translate client needs into scalable, high-performance solutions. I also collaborate with business development on pre-sales support, solution architecture, product demos, and new opportunities.

Before this, I was a Lead Software Developer at VizworX, delivering high-quality digital experiences for a diverse range of clients. That hands-on technical expertise informs and strengthens my current leadership role.

Over the years, I’ve led transformative projects that helped clients enhance their digital capabilities, and I’ve driven the creation of VizworX products that are now deployed across multiple organizations.

Previously

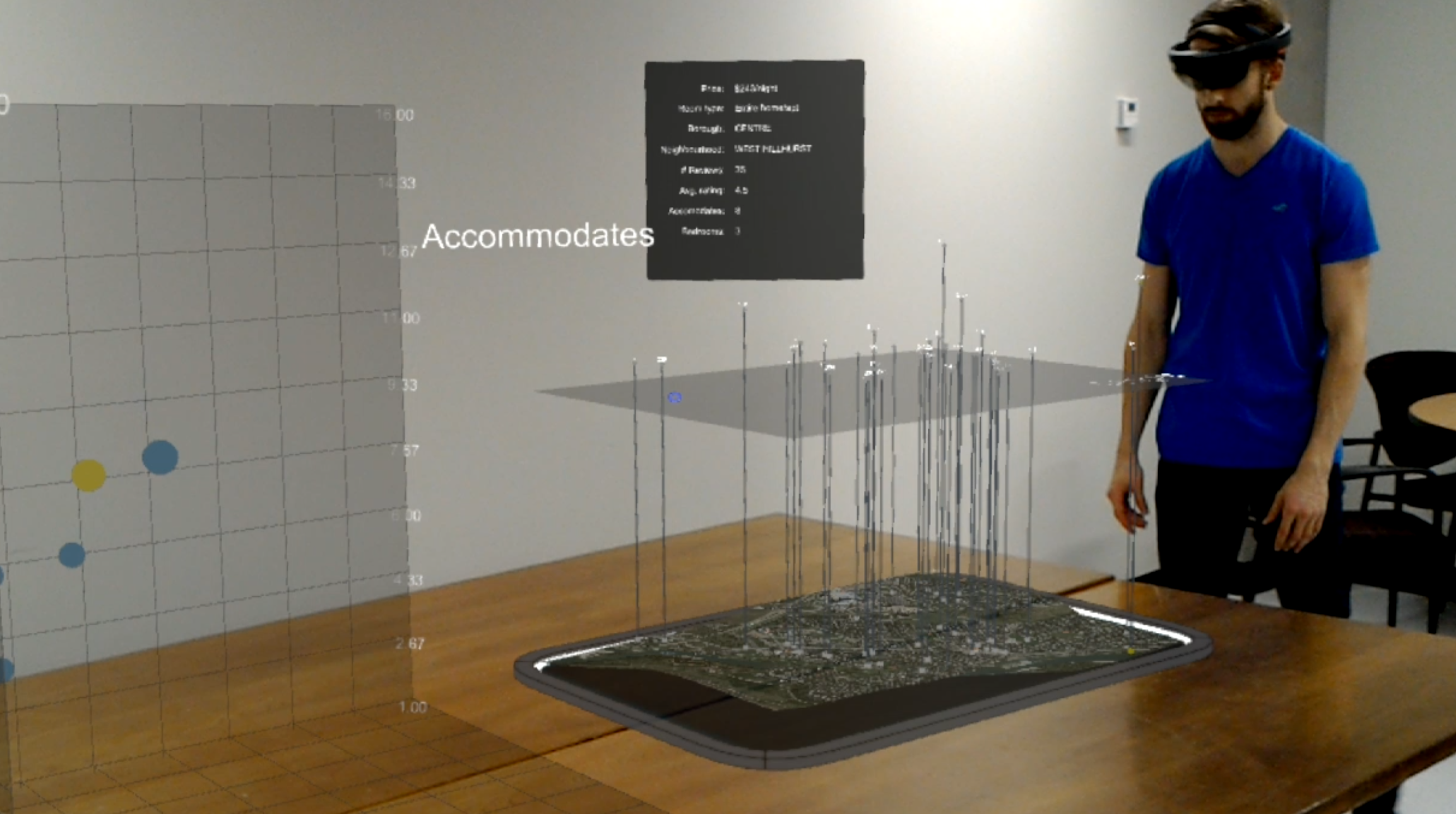

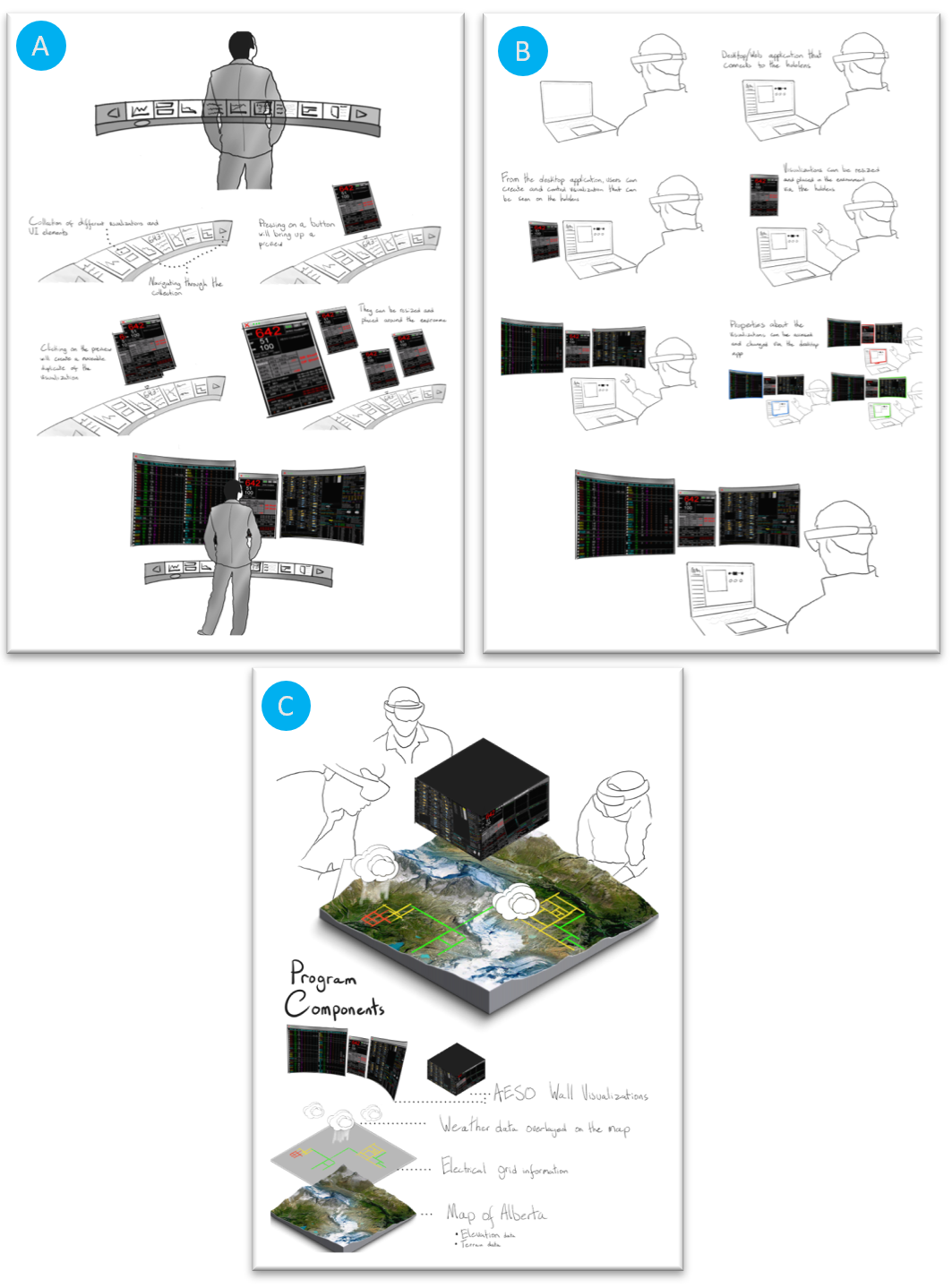

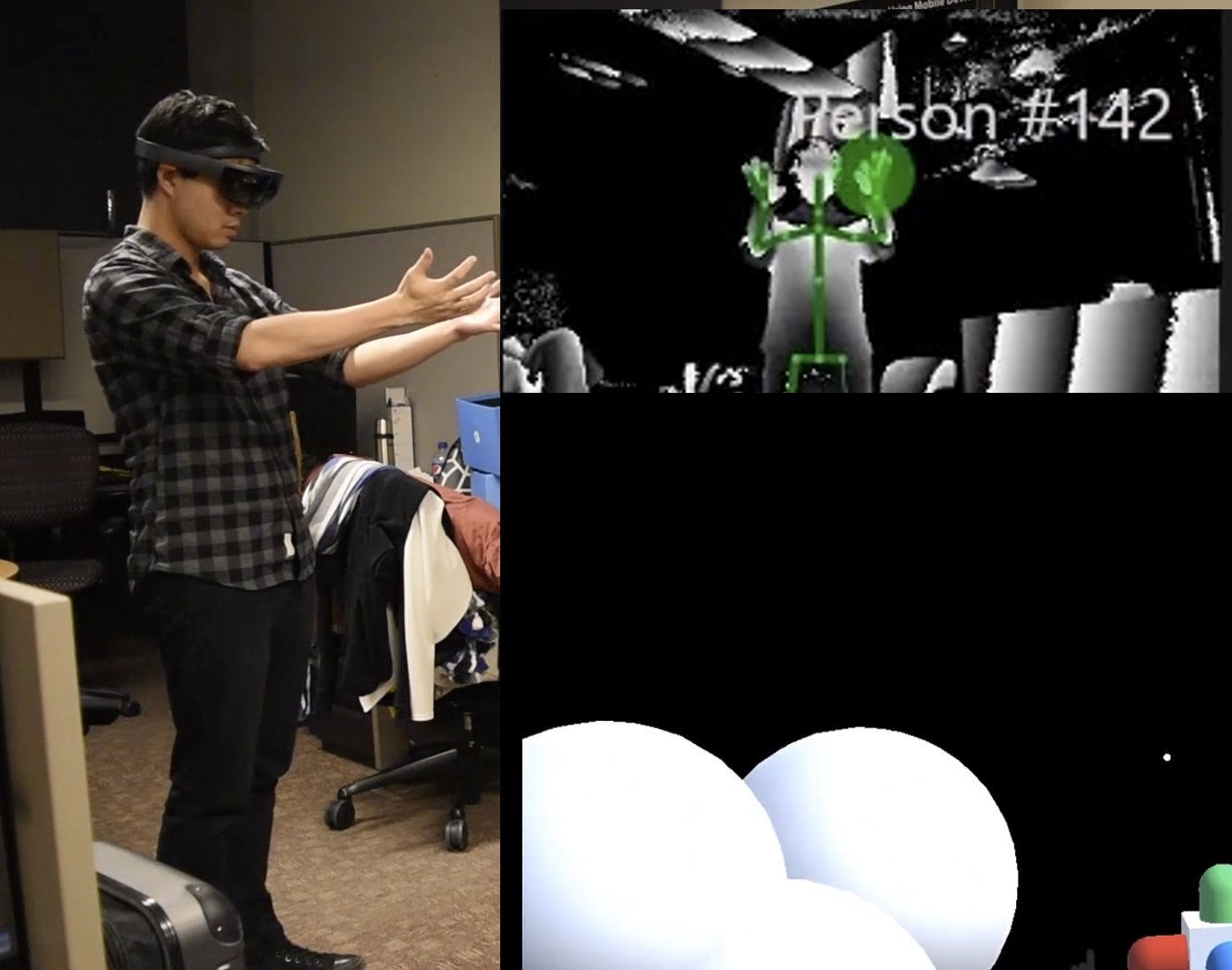

The Agile Surface Engineering (ASE) Lab (now known as SeriousXR) is a multidisciplinary research group specializing in immersive analytics, human-computer interaction, and agile software engineering techniques. The lab explores and creates innovative tools and processes that transform complex information into actionable insights.

As a Researcher and Software Developer, I combined applied research with software development to create immersive applications, conduct studies, and publish papers. I collaborated with industry partners, mentored interns, and showcased our work, bridging academic research with real-world impact.

During my time there, I published the paper "User experience guidelines for designing HMD extended reality applications", which has received 90+ citations on Google Scholar.

At the ASE Lab, I conducted research and developed prototype applications that helped establish the groundwork for applying immersive technology to real-world, data-driven decision-making.

Early Experiences

As a Teaching Assistant, I taught computer science and data analysis. I led tutorials for DATA 201 (Excel, OpenRefine, Tableau) and CPSC 219 (Java programming, object-oriented analysis, and system design).

As a Web Designer at Instimax, I created custom WordPress websites, managing design, client consultations, and graphics to deliver cohesive online solutions.

Education

Master of Science, Computer Science

Bachelor of Science, Computer Science

Side Projects

I’m naturally curious, and side projects give me the chance to explore new ideas, experiment with emerging technologies, and develop new skills. Quickly turning concepts into prototypes uncovers insights that shape how solutions are designed and delivered, and those prototypes often evolve into more refined projects and ideas.

Digital Field Worker: Realtime HoloLens Visualizer

We want to set the Digital Field Worker of the future free of serial cables, diagnostic ports and telnet!

The Digital Field Worker of the future will be required to diagnose and repair complex, expensive, heavily instrumented equipment. Existing solutions such as HoloLens Guides and Remote Assist are extremely helpful. But what if a holographic application could also bring in real-time equipment data and let the worker interact with that equipment directly?

By adding real-time data, we empower the Digital Field Worker to read equipment trouble codes, see real-time performance data, power off or reset equipment, and otherwise interact with it, all through the HoloLens and even when disconnected from Azure!

Open source: https://github.com/mixedrealityiot/OBD-II_MQTT_HoloLens

MapLink: Real-time Phone Controller for HoloLens

Traditional map applications can be limiting when working with complex spatial data. Navigation on a small screen doesn’t always convey scale, context, or depth, making collaboration and decision-making harder.

This project addresses that challenge by linking a HoloLens 2 headset with a mobile app running the same map. Any changes made on the phone, such as panning or zooming, are tracked and sent through SignalR to update the 3D version of the map in real time on the headset. This approach allows users to interact with maps in a familiar way on their phone while unlocking a richer, more immersive 3D view in mixed reality, making spatial information easier to understand, share, and act on.
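The phone-to-headset sync can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the project's actual code: it assumes the phone broadcasts a small view-state message (map center latitude/longitude and zoom level) after every pan or zoom, and it swaps the real SignalR connection for a hypothetical in-memory hub so the example runs without a server. The names `MapViewState`, `InMemoryHub`, `PhoneMap`, and `HeadsetMap` are illustrative only.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable, List


@dataclass(frozen=True)
class MapViewState:
    """Hypothetical message: the part of the phone's map view the headset mirrors."""
    center_lat: float
    center_lon: float
    zoom: float


def encode(state: MapViewState) -> str:
    # Serialize to JSON so the state could travel as a hub message argument.
    return json.dumps(asdict(state))


def decode(payload: str) -> MapViewState:
    # Rebuild the view state on the receiving side.
    return MapViewState(**json.loads(payload))


class InMemoryHub:
    """Stand-in for a SignalR hub (assumption): broadcasts each message to every subscriber."""

    def __init__(self) -> None:
        self._handlers: List[Callable[[str], None]] = []

    def subscribe(self, handler: Callable[[str], None]) -> None:
        self._handlers.append(handler)

    def broadcast(self, payload: str) -> None:
        for handler in self._handlers:
            handler(payload)


class PhoneMap:
    """Phone side: every pan or zoom publishes the full new view state."""

    def __init__(self, hub: InMemoryHub) -> None:
        self.hub = hub
        self.view = MapViewState(0.0, 0.0, 1.0)

    def _publish(self) -> None:
        self.hub.broadcast(encode(self.view))

    def pan(self, d_lat: float, d_lon: float) -> None:
        self.view = MapViewState(
            self.view.center_lat + d_lat, self.view.center_lon + d_lon, self.view.zoom
        )
        self._publish()

    def zoom_to(self, zoom: float) -> None:
        self.view = MapViewState(self.view.center_lat, self.view.center_lon, zoom)
        self._publish()


class HeadsetMap:
    """Headset side: replaces its 3D map view with whatever state arrives."""

    def __init__(self) -> None:
        self.view = MapViewState(0.0, 0.0, 1.0)

    def on_view_changed(self, payload: str) -> None:
        self.view = decode(payload)


# Usage: wire one phone and one headset to the same hub.
hub = InMemoryHub()
headset = HeadsetMap()
hub.subscribe(headset.on_view_changed)
phone = PhoneMap(hub)
phone.pan(51.05, -114.07)
phone.zoom_to(12.0)
print(headset.view)  # MapViewState(center_lat=51.05, center_lon=-114.07, zoom=12.0)
```

In this sketch the phone sends the complete view state rather than incremental deltas, so a repeated message is harmless and a dropped one is simply overwritten by the next update.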